The command I'm using:

```
sqoop import --connect --username system --password 'system' -m 1 --fetch-size 10 --table system.ADDRESS --append --columns "P_ADD","AREA","PIN","CT_ID" --create-hive-table --hive-table prod.address_hive --hive-import
```

Sqoop logs the following:

```
21/12/21 18:25:07 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure.
21/12/21 18:25:07 INFO tool.BaseSqoopTool: Using Hive-specific delimiters for output. You can override
21/12/21 18:25:07 INFO tool.BaseSqoopTool: delimiters with --fields-terminated-by, etc.
tool.BaseSqoopTool: Without specifying parameter --hive-import. Note that
21/12/21 18:25:07 WARN tool.BaseSqoopTool: those arguments
tool.BaseSqoopTool: specify --hive-import to apply them correctly or
```

We are trying to read a Hive table using Spark SQL, but it does not display any records (the output has 0 records). On checking, we found that the table's HDFS files are stored in multiple subdirectories, like this:

```
CDPJobs]$ hdfs dfs -ls /its/cdp/refn/cot_tbl_cnt_hive/
drwxrwxr-x+ - hadoop hadoop 0 20:17 /its/cdp/refn/cot_tbl_cnt_hive/1
drwxrwxr-x+ - hadoop hadoop 0 20:17 /its/cdp/refn/cot_tbl_cnt_hive/10
drwxrwxr-x+ - hadoop hadoop 0 20:17 /its/cdp/refn/cot_tbl_cnt_hive/11
drwxrwxr-x+ - hadoop hadoop 0 20:17 /its/cdp/refn/cot_tbl_cnt_hive/12
drwxrwxr-x+ - hadoop hadoop 0 20:17 /its/cdp/refn/cot_tbl_cnt_hive/13
drwxrwxr-x+ - hadoop hadoop 0 20:17 /its/cdp/refn/cot_tbl_cnt_hive/14
drwxrwxr-x+ - hadoop hadoop 0 20:17 /its/cdp/refn/cot_tbl_cnt_hive/15
```

We tried setting the properties below in the nf file, but the issue still persists:

```
Set .=true
```

We are using Spark version 2.4.6. Does anyone know a solution to this?

DbVisualizer Kerberos password

The error returned when trying to connect is the following:

```
Could not open client transport for any of the Server URI's in ZooKeeper: Can't get Kerberos realm
```

The database URL of the connection is:

Using these URIs returns:

```
Could not open client transport with JDBC Uri : Can't get Kerberos realm
```
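The two errors above differ only in how the Hive JDBC driver locates HiveServer2: the first comes from ZooKeeper service discovery, the second from a host:port written directly into the URL. Both end in the same "Can't get Kerberos realm", which, as far as we can tell, is raised on the client when the JVM cannot determine a default Kerberos realm. A minimal Python sketch that assembles both URL forms, assuming hypothetical host names, the default `hiveserver2` ZooKeeper namespace and a `hive/_HOST@EXAMPLE.COM` service principal (none of these values come from the original setup):

```python
"""Sketch: assemble Hive JDBC URLs for a Kerberized HiveServer2.

All host names, ports, the namespace and the realm are hypothetical
placeholders, not values from the original post.
"""


def zookeeper_url(zk_hosts, principal, namespace="hiveserver2", database="default"):
    """URL that asks the driver to discover HiveServer2 through ZooKeeper."""
    quorum = ",".join(zk_hosts)  # host:port pairs, comma separated
    return (
        f"jdbc:hive2://{quorum}/{database};"
        f"serviceDiscoveryMode=zooKeeper;zooKeeperNamespace={namespace};"
        f"principal={principal}"
    )


def direct_url(host, principal, port=10000, database="default"):
    """URL that connects straight to one HiveServer2 instance."""
    return f"jdbc:hive2://{host}:{port}/{database};principal={principal}"


if __name__ == "__main__":
    # _HOST is replaced by the driver with the server's host name;
    # EXAMPLE.COM stands in for the cluster's realm.
    principal = "hive/_HOST@EXAMPLE.COM"
    print(zookeeper_url(["zk1.example.com:2181", "zk2.example.com:2181"], principal))
    print(direct_url("hs2.example.com", principal))
```

Keeping the two builders separate makes it easy to try the direct form against a single HiveServer2 host, which takes ZooKeeper out of the picture. Since both forms fail with the same realm message here, the problem is more likely on the Kerberos side of the client than in service discovery.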

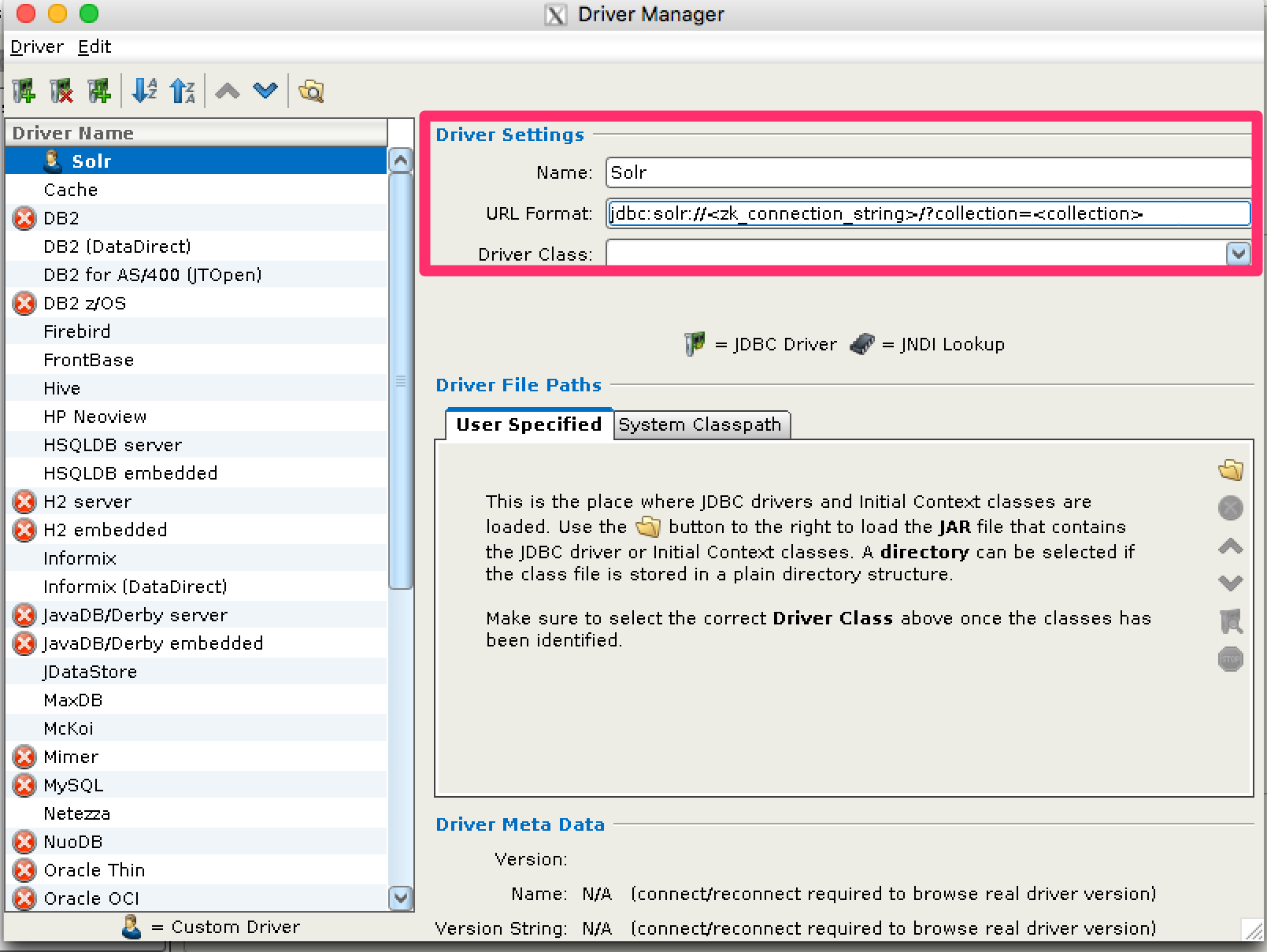

DbVisualizer Kerberos driver

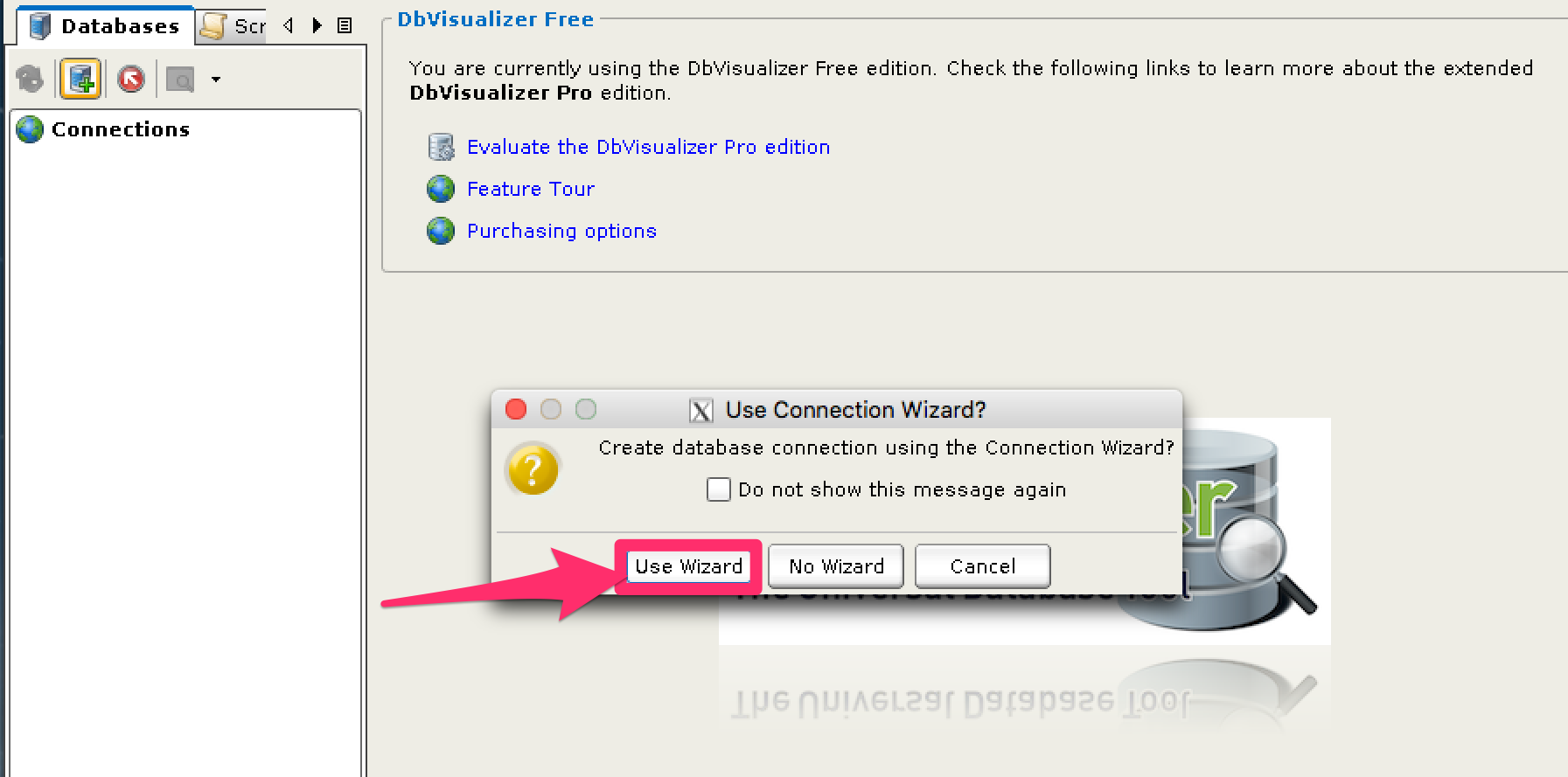

We have an HDP (3.1.0) cluster with Hive (3.0.0.3.1). I am trying to connect to Hive with DbVisualizer, without success. The client (where I am running DbVisualizer) is a CentOS 7 machine.

On the client, here is the Kerberos configuration in /etc/nf (copied from one of the cluster's machines):

```
cat nf
#default_tkt_enctypes = aes des3-cbc-sha1 rc4 des-cbc-md5
#default_tgs_enctypes = aes des3-cbc-sha1 rc4 des-cbc-md5
```

I ran kinit, and here is the result of klist:

```
etc]$ klist
Default principal:
Valid starting     Expires     Service principal
```

In Tools > Tool Properties > Specify overridden Java VM Properties here, I set: =true

The JAR used for the driver is the one provided by the cluster in Ambari > Hive > JDBC Standalone jar.

Connecting DbVisualizer and DataGrip to Hive with Kerberos enabled

Prerequisites

1. Your thrift server may be SparkThriftServer or HiveServer2.
2. The thrift server must be reachable through the proxy.
3. The Kerberos server must be reachable through the proxy.
4. Add the following parameter to DbVisualizer in Tools > Tool Properties > General > Specify overridden Java VM properties: =false
5. Create an environment variable KRB5CCNAME = c:\tmp\CCCACHE
7. kinit must be run with the Java used by DbVisualizer.
8. The krb5.ini file must be in c:/Windows.
10. The default realm (default_realm) should be the one used for your cluster.
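Steps 5, 7 and 10 can be checked before DbVisualizer is started. Step 10 is directly related to the "Can't get Kerberos realm" error: if the JVM finds no krb5.ini, or finds one without default_realm, it has no default realm to use. The Python sketch below assumes a Windows client, as the steps do. It reads default_realm from the [libdefaults] section of a krb5 configuration and builds, without running it, the kinit invocation from the Java runtime DbVisualizer uses, with KRB5CCNAME pointing at c:\tmp\CCCACHE. The parser is a simplified reading of the krb5 format (no includes, nested realm blocks skipped). The Java home path and principal in the usage lines are hypothetical, and the code assumes that runtime ships the kinit tool.

```python
"""Pre-flight checks for a Kerberized DbVisualizer client (hypothetical sketch).

Assumes a Windows client as in the steps above: the credential cache is named
by KRB5CCNAME and kinit comes from the Java runtime DbVisualizer itself uses.
"""
import os
from pathlib import PureWindowsPath


def default_realm(krb5_text):
    """Return default_realm from the [libdefaults] section, or None if unset."""
    section = None
    for raw in krb5_text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", ";")):
            continue  # skip blanks and comments such as #default_tkt_enctypes
        if line.startswith("[") and line.endswith("]"):
            section = line[1:-1].strip()
            continue
        if section == "libdefaults" and "=" in line:
            key, value = (part.strip() for part in line.split("=", 1))
            if key == "default_realm":
                return value
    return None


def kinit_command(java_home, principal, cache=r"c:\tmp\CCCACHE"):
    """Build argv and environment for the JDK kinit tool; nothing is executed."""
    kinit = PureWindowsPath(java_home) / "bin" / "kinit.exe"
    env = dict(os.environ, KRB5CCNAME=cache)  # step 5: name the cache file
    return [str(kinit), principal], env


if __name__ == "__main__":
    sample = """
[libdefaults]
  default_realm = EXAMPLE.COM
  #default_tkt_enctypes = aes des3-cbc-sha1 rc4 des-cbc-md5
[realms]
  EXAMPLE.COM = {
    kdc = kdc.example.com
  }
"""
    print(default_realm(sample))
    # Hypothetical install path and principal, for illustration only.
    argv, env = kinit_command(r"C:\Program Files\DbVisualizer\jre", "user@EXAMPLE.COM")
    print(argv, env["KRB5CCNAME"])
```

The command is returned rather than executed so it can be inspected, or passed to `subprocess.run(argv, env=env)` on the client itself.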
